What Is Data Scraping?

Data scraping, also known as web scraping, is the process of importing information from a website into a spreadsheet or local file saved on your computer. It’s one of the most efficient ways to get data from the web, and in some cases to channel that data to another website. Popular uses of data scraping include:

- Research for web content/business intelligence

- Pricing for travel booker sites/price comparison sites

- Finding sales leads/conducting market research by crawling public data sources (e.g. Yell and Twitter)

- Sending product data from an e-commerce site to another online vendor (e.g. Google Shopping)

And that list’s just scratching the surface. Data scraping has a vast number of applications – it’s useful in just about any case where data needs to be moved from one place to another.

The basics of data scraping are relatively easy to master. Let’s go through how to set up a simple data scraping action using Excel.

Data Scraping with dynamic web queries in Microsoft Excel

Setting up a dynamic web query in Microsoft Excel is an easy, versatile data scraping method that enables you to set up a data feed from an external website (or multiple websites) into a spreadsheet.

Watch this excellent tutorial video to learn how to import data from the web to Excel – or, if you prefer, use the written instructions below:

- Open a new workbook in Excel

- Click the cell you want to import data into

- Click the ‘Data’ tab

- Click ‘Get external data’

- Click the ‘From web’ symbol

- Note the little yellow arrows that appear at the top-left of the web page and alongside certain content

- Paste the URL of the web page you want to import data from into the address bar (we recommend choosing a site where data is shown in tables)

- Click ‘Go’

- Click the yellow arrow next to the data you wish to import

- Click ‘Import’

- An ‘Import data’ dialogue box pops up

- Click ‘OK’ (or change the cell selection, if you like)

If you’ve followed these steps, you should now be able to see the data from the website set out in your spreadsheet.
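
If you'd rather see what an import like this does under the hood, here is a minimal sketch in Python. It is not how Excel works internally; it simply illustrates the same idea of pulling the rows of an HTML table into a spreadsheet-friendly format (CSV). The `TableParser` class, the `table_to_csv` function and the `SAMPLE_HTML` fragment are our own illustrative names and data, and the sketch assumes a simple table with no nesting.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # row currently being read
        self._cell = None   # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_csv(html):
    """Return the table rows found in `html` as CSV text."""
    parser = TableParser()
    parser.feed(html)
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


# Hypothetical page fragment; a real page would be downloaded first,
# for example with urllib.request.urlopen(url).read().decode().
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Kettle</td><td>19.99</td></tr>
  <tr><td>Toaster</td><td>24.50</td></tr>
</table>
"""

print(table_to_csv(SAMPLE_HTML))
```

The resulting CSV text can be saved to a file and opened in any spreadsheet program.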

The great thing about dynamic web queries is that they don’t just import data into your spreadsheet as a one-off operation – they feed it in, meaning the spreadsheet is regularly updated with the latest version of the data, as it appears on the source website. That’s why we call them dynamic.

To configure how regularly your dynamic web query updates the data it imports, go to ‘Data’, then ‘Properties’, then select a frequency (“Refresh every X minutes”).
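
Outside Excel, the same “refresh every X minutes” idea can be sketched as a simple loop. The example below is an assumption-laden illustration rather than a production scheduler: `refresh_loop` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for whatever function actually downloads and parses your source page.

```python
import time


def refresh_loop(fetch, interval_seconds, max_runs, sleep=time.sleep):
    """Call `fetch` every `interval_seconds`, `max_runs` times in total.

    Returns the list of results, newest last.
    """
    results = []
    for run in range(max_runs):
        results.append(fetch())
        if run < max_runs - 1:   # no need to wait after the final run
            sleep(interval_seconds)
    return results


# Stand-in for a real scraping function (hypothetical).
counter = {"n": 0}


def fake_fetch():
    counter["n"] += 1
    return f"snapshot {counter['n']}"


# interval_seconds=0 keeps the demo instant; use e.g. 600 for ten minutes.
snapshots = refresh_loop(fake_fetch, interval_seconds=0, max_runs=3)
print(snapshots)
```

Passing `sleep` as a parameter is a small design choice that lets you test the loop without actually waiting.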

Automated data scraping with tools

Getting to grips with using dynamic web queries in Excel is a useful way to gain an understanding of data scraping. However, if you intend to use data scraping regularly in your work, you may find a dedicated data scraping tool more effective.

Here are our thoughts on a few of the most popular data scraping tools on the market:

Data Scraper (Chrome plugin)

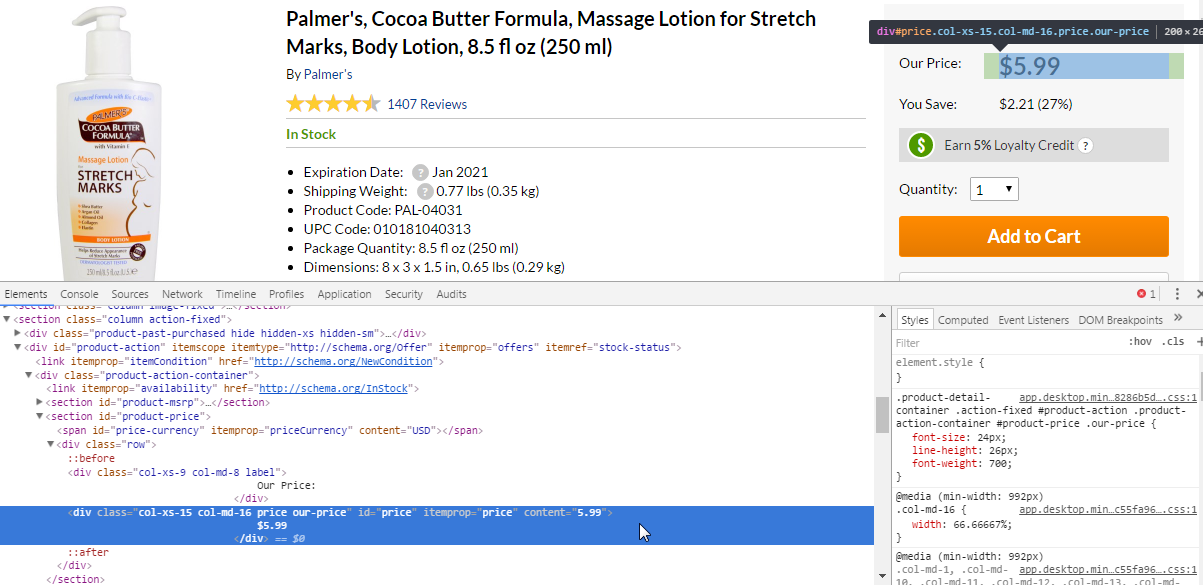

Data Scraper slots straight into your Chrome browser extensions, allowing you to choose from a range of ready-made data scraping “recipes” to extract data from whichever web page is loaded in your browser.

This tool works especially well with popular data scraping sources like Twitter and Wikipedia, as the plugin includes a greater variety of recipe options for such sites.

We tried Data Scraper out by mining a Twitter hashtag, “#journorequest”, for PR opportunities, using one of the tool’s public recipes. Here’s a flavour of the data we got back:

As you can see, the tool has provided a table with the username of every account which had posted recently on the hashtag, plus their tweet and its URL.

Having this data in this format would be more useful to a PR rep than simply seeing the data in Twitter’s browser view for a number of reasons:

- It could be used to help create a database of press contacts

- You could keep referring back to this list and easily find what you’re looking for, whereas Twitter continuously updates

- The list is sortable and editable

- It gives you ownership of the data – which, on Twitter, could be taken offline or changed at any moment

We’re impressed with Data Scraper, even though its public recipes are sometimes slightly rough-around-the-edges. Try installing the free version on Chrome, and have a play around with extracting data. Be sure to watch the intro movie they provide to get an idea of how the tool works and some simple ways to extract the data you want.

WebHarvy

WebHarvy is a point-and-click data scraper with a free trial version. Its biggest selling point is its flexibility – you can use the tool’s in-built web browser to navigate to the data you would like to import, and can then create your own mining specifications to extract exactly what you need from the source website.

import.io

Import.io is a feature-rich data mining tool suite that does much of the hard work for you. It has some interesting features, including “What’s changed?” reports that can notify you of updates to specified websites – ideal for in-depth competitor analysis.

How are marketers using data scraping?

As you will have gathered by this point, data scraping can come in handy just about anywhere where information is used. Here are some key examples of how the technology is being used by marketers:

Gathering disparate data

One of the great advantages of data scraping, says Marcin Rosinski, CEO of FeedOptimise, is that it can help you gather different data into one place. “Crawling allows us to take unstructured, scattered data from multiple sources and collect it in one place and make it structured,” says Marcin. “If you have multiple websites controlled by different entities, you can combine it all into one feed.

“The spectrum of use cases for this is infinite.”

FeedOptimise offers a wide variety of data scraping and data feed services, which you can find out about at their website.
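
To make the idea of combining scattered data into one structured feed concrete, here is a small sketch. It is our own illustration, not FeedOptimise’s method: the `normalise` and `combine` functions and the two sample sites are hypothetical, and the sketch assumes each source has already been scraped into a list of records.

```python
def normalise(record, field_map):
    """Rename a source's fields to the shared names in `field_map`."""
    return {shared: record[original] for shared, original in field_map.items()}


def combine(sources):
    """Merge (records, field_map) pairs into one list of uniform records."""
    feed = []
    for records, field_map in sources:
        feed.extend(normalise(r, field_map) for r in records)
    return feed


# Hypothetical data from two sites that label the same facts differently.
site_a = [{"name": "Kettle", "cost": "19.99"}]
site_b = [{"title": "Toaster", "price_gbp": "24.50"}]

feed = combine([
    (site_a, {"title": "name", "price": "cost"}),
    (site_b, {"title": "title", "price": "price_gbp"}),
])
print(feed)
```

Each source keeps its own field names; only the mapping changes, so adding a third site means adding one more pair to the list.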

Expediting research

The simplest use for data scraping is retrieving data from a single source. If there’s a web page that contains lots of data that could be useful to you, the easiest way to get that information onto your computer in an orderly format will probably be data scraping.

Try finding a list of useful contacts on Twitter, and import the data using data scraping. This will give you a taste of how the process can fit into your everyday work.

Outputting an XML feed to third party sites

Feeding product data from your site to Google Shopping and other third party sellers is a key application of data scraping for e-commerce. It allows you to automate the potentially laborious process of updating your product details – which is crucial if your stock changes often.

“Data scraping can output your XML feed for Google Shopping,” says Target Internet’s Marketing Director, Ciaran Rogers. “I have worked with a number of online retailers who were continually adding new SKUs to their site as products came into stock. If your e-commerce solution doesn’t output a suitable XML feed that you can hook up to your Google Merchant Centre so you can advertise your best products, that can be an issue. Often your latest products are potentially the best sellers, so you want to get them advertised as soon as they go live. I’ve used data scraping to produce up-to-date listings to feed into Google Merchant Centre. It’s a great solution, and actually, there is so much you can do with the data once you have it. Using the feed, you can tag the best converting products on a daily basis so you can share that information with Google AdWords and ensure you bid more competitively on those products. Once you set it up, it’s all quite automated. The flexibility of a good feed that you have control of in this way is great, and it can lead to some very definite improvements in those campaigns, which clients love.”
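
As a rough illustration of what such an XML feed might look like, the sketch below builds a small RSS 2.0 document using the `g:` namespace that Google product feeds use. The `build_feed` function, the shop details and the sample product are all hypothetical, and the attributes shown are only a partial set; check Google’s product data specification for the fields your feed actually requires.

```python
import xml.etree.ElementTree as ET

G_NS = "http://base.google.com/ns/1.0"   # namespace for the g: attributes
ET.register_namespace("g", G_NS)


def build_feed(shop_title, shop_url, products):
    """Return an RSS 2.0 product feed as an XML string."""
    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = shop_title
    ET.SubElement(channel, "link").text = shop_url
    ET.SubElement(channel, "description").text = "Product feed"
    for product in products:
        item = ET.SubElement(channel, "item")
        # Each key in the product dict becomes a <g:key> element.
        for key, value in product.items():
            ET.SubElement(item, f"{{{G_NS}}}{key}").text = value
    return ET.tostring(rss, encoding="unicode")


# Hypothetical scraped product; values are illustrative only.
PRODUCTS = [{
    "id": "SKU-001",
    "title": "Kettle",
    "link": "https://example.com/kettle",
    "price": "19.99 GBP",
    "availability": "in_stock",
}]

xml_text = build_feed("Example Shop", "https://example.com", PRODUCTS)
print(xml_text)
```

In practice the `PRODUCTS` list would come from your scraper, and the XML would be written to the file that Merchant Centre fetches.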

It’s possible to set up a simple data feed into Google Merchant Centre for yourself. Here’s how it’s done:

How to set up a data feed to Google Merchant Centre

Using one of the techniques or tools described previously, create a file that uses a dynamic website query to import the details of products listed on your site. This file should automatically update at regular intervals.

The details should be set out as specified here.

- Upload this file to a password-protected URL

- Go to Google Merchant Centre and log in (make sure your Merchant Centre account is properly set up first)

- Go to Products

- Click the plus button

- Enter your target country and create a feed name

- Select the ‘scheduled fetch’ option

- Add the URL of your product data file, along with the username and password required to access it

- Select the fetch frequency that best matches your product upload schedule

- Click Save

- Your product data should now be available in Google Merchant Centre. Just make sure you click on the ‘Diagnostics’ tab to check its status and ensure it’s all working smoothly.

The dark side of data scraping

There are many positive uses for data scraping, but it does get abused by a small minority too.

The most prevalent misuse of data scraping is email harvesting – the scraping of data from websites, social media and directories to uncover people’s email addresses, which are then sold on to spammers or scammers. In some jurisdictions, using automated means like data scraping to harvest email addresses with commercial intent is illegal, and it is almost universally considered bad marketing practice.

Many web users have adopted techniques to help reduce the risk of email harvesters getting hold of their email address, including:

- Address munging: changing the format of your email address when posting it publicly, e.g. typing ‘patrick[at]gmail.com’ instead of ‘patrick@gmail.com’. This is an easy but slightly unreliable approach to protecting your email address on social media – some harvesters will search for various munged combinations as well as emails in a normal format, so it’s not entirely airtight.

- Contact forms: using a contact form instead of posting your email address(es) on your website.

- Images: if your email address is presented in image form on your website, it will be beyond the technological reach of most people involved in email harvesting.
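
To see why address munging is not entirely airtight, consider this short sketch. The `munge` and `demunge` functions are our own illustrative names, and the pattern covers only a couple of common munged forms; the point is simply that a substitution which is easy to apply is also easy to reverse.

```python
import re


def munge(address):
    """Replace '@' with '[at]' so naive scrapers miss the address."""
    return address.replace("@", "[at]")


# A deliberately simple pattern covering a few common munged forms.
MUNGED_AT = re.compile(r"\s*(?:\[at\]|\(at\))\s*", re.IGNORECASE)


def demunge(text):
    """Undo the common '[at]' / '(at)' substitutions."""
    return MUNGED_AT.sub("@", text)


munged = munge("patrick@gmail.com")
print(munged)            # patrick[at]gmail.com
print(demunge(munged))   # patrick@gmail.com
```

A contact form or an image avoids this weakness because there is no address in the page text to reverse-engineer.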

The Data Scraping Future

Whether or not you intend to use data scraping in your work, it’s advisable to educate yourself on the subject, as it is likely to become even more important in the next few years.

There are now data scraping AI tools on the market that use machine learning to keep getting better at recognising inputs that only humans have traditionally been able to interpret – like images.

Big improvements in data scraping from images and videos will have far-reaching consequences for digital marketers. As image scraping becomes more in-depth, we’ll be able to know far more about online images before we’ve seen them ourselves – and this, like text-based data scraping, will help us do lots of things better.

Then there’s the biggest data scraper of all – Google. The whole experience of web search is going to be transformed when Google can accurately infer as much from an image as it can from a page of copy – and that goes double from a digital marketing perspective.

If you’re in any doubt over whether this can happen in the near future, try out Google’s image interpretation API, Cloud Vision, and let us know what you think.
